Boost Results with Expert Guidance

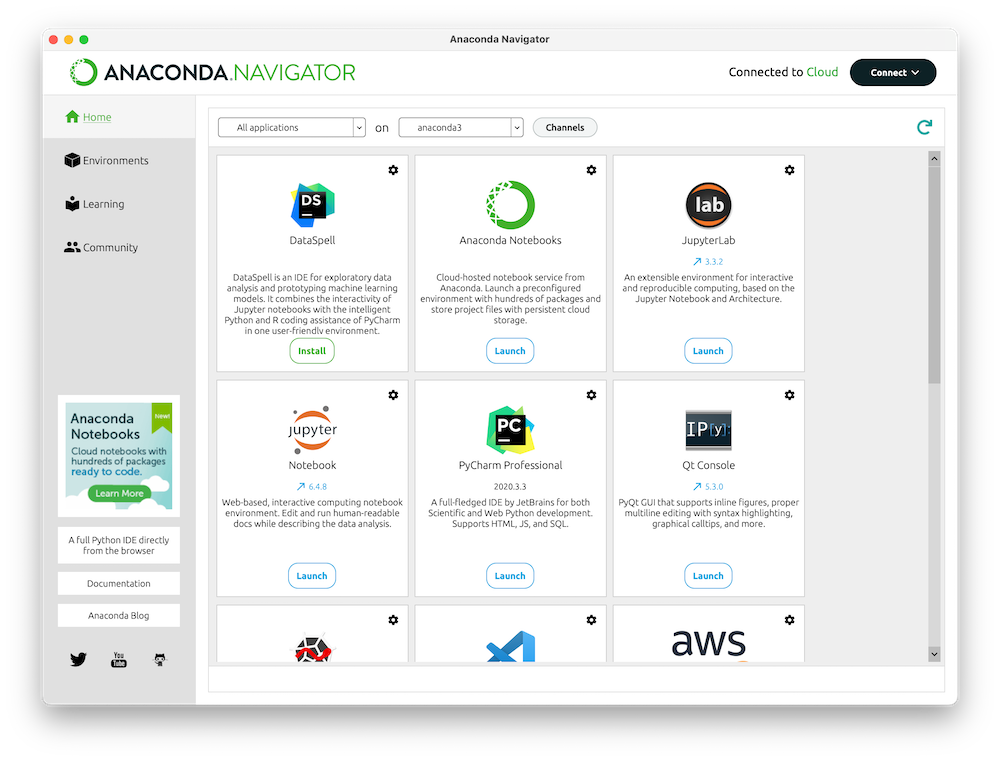

The Engine Powering Global AI Platforms

We’re not just a trusted partner; we’re the foundation for AI and data science solutions at industry-leading companies like Microsoft, IBM, and Oracle. Our packages and software support powerful tools such as Python in Excel and a variety of advanced data platforms.

Say Goodbye to IT Roadblocks